90 Digital CEO Nick Garner talks about trust optimisation and why you will get higher click through rates in this edition of CalvinAyre.com’s SEO Tip of the Week.

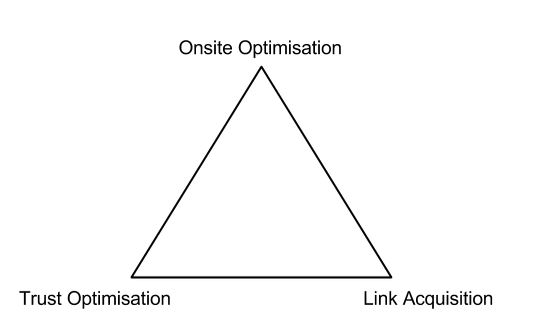

Trust optimisation is a mental framework covering a basket of factors which are neither link acquisition nor onsite optimisation.

If you trust optimise your website, you will get higher click through rates, which should then reflect well in Google search results. When doing SEO projects, I suggest you think about three things: onsite optimisation, link acquisition and trust optimisation.

Rationale for Trust Optimisation

Rand Fishkin from Moz recently ran an experiment using the long-tail search query ‘IMEC Lab’. His post ranked 7th. He then asked his Twitter followers to go and click on his post in this set of search results. After 228 visits from Google, mostly through this search query, Rand checked again and his search listing was number one. Source: http://moz.com/rand/queries-clicks-influence-googles-results

Another example comes from negative SEO.

Bartosz Goralewicz did an experiment where he created a bot designed to click on every search result except for his own, which was ranking number 1 for the keyword ‘penguin 3.0’.

The bot got to work, clicking heavily all around his search result. The upshot was that his click through rate dropped massively relative to the position he was in, and his rankings tumbled. Interestingly, when you look at the charts, rankings dropped, then the placement was auditioned for a short time, then it failed the audition and the page dropped again.

https://goralewicz.co/blog/negative-seo-with-no-backlinks-a-case-study/

These are just a couple of the many anecdotal accounts of user engagement affecting rankings, either upwards or downwards.

The principle is really simple. Each search position will have an expected click through rate. If the click through rate is higher or lower than expected, that listing will go either up or down (discounting other factors, like an influx of PageRank).
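To make that principle concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: the expected click through rates, the `tolerance` threshold and the `ctr_signal` function are hypothetical, and nothing here is a published Google formula.

```python
# Hypothetical expected click through rate for each search position.
# These numbers are made up for illustration; Google does not publish them.
EXPECTED_CTR = {1: 0.30, 2: 0.15, 3: 0.10, 4: 0.07, 5: 0.05}


def ctr_signal(position, clicks, impressions, tolerance=0.2):
    """Compare a listing's observed CTR with the expected CTR for its position.

    Returns "up" if the listing clearly beats expectation, "down" if it
    clearly falls short, and "hold" if it sits within the tolerance band.
    """
    if impressions <= 0:
        raise ValueError("impressions must be positive")
    expected = EXPECTED_CTR[position]
    observed = clicks / impressions
    if observed > expected * (1 + tolerance):
        return "up"
    if observed < expected * (1 - tolerance):
        return "down"
    return "hold"


# A listing in position 3 that gets 20 clicks from 100 impressions
# is doing twice as well as the assumed 10% for that position.
print(ctr_signal(3, clicks=20, impressions=100))
```

The tolerance band is there so that small, noisy differences from the expected rate do not count as a signal in either direction.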

Statistical robustness

As you may remember from years past, search results would move up or down slowly, so they were basically static. Now search results shuffle around within a range, which is why Google doesn’t give you an exact ranking position. Instead, it gives you an average position for your search ranking.

From a statistical point of view it’s much better to test the same search result in different positions to get a really solid expected click through rate across a number of positions for that piece of content. This way Google can be pretty sure you are getting the right CTR for your search ranking position.

Because Google has to deal with so much scale, it makes sense for them to focus on simple signals like click through rates and bounce rates back to search results. You can see these are a good proxy for whether people like a website or not.
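One way to picture testing across positions is to pool the clicks a page earned at every position it was shown in and divide by the clicks an average listing would have earned from the same impressions. The sketch below does that in Python. The expected rates and the function name `position_normalised_ctr` are my own assumptions for illustration, not anything Google has documented.

```python
# Hypothetical expected CTR by position, for illustration only.
EXPECTED_CTR = {1: 0.30, 2: 0.15, 3: 0.10, 4: 0.07, 5: 0.05}


def position_normalised_ctr(observations, expected_ctr=EXPECTED_CTR):
    """Score a page across all the positions it has been shown in.

    observations is a list of (position, impressions, clicks) tuples.
    The score is actual clicks divided by the clicks expected for the
    same impressions: above 1.0 beats expectation, below 1.0 falls short.
    """
    actual_clicks = sum(clicks for _, _, clicks in observations)
    expected_clicks = sum(
        expected_ctr[position] * impressions
        for position, impressions, _ in observations
    )
    if expected_clicks == 0:
        raise ValueError("no impressions to score")
    return actual_clicks / expected_clicks


# The same page shown 1,000 times in position 3 and 1,000 times in position 5.
page = [(3, 1000, 150), (5, 1000, 75)]
print(position_normalised_ctr(page))
```

Because the score is built from several positions, one lucky or unlucky spell in a single position has less influence on it than it would on a single-position comparison.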

For example.

To show you what I mean, imagine you have an affiliate who dumps a huge number of links into a page. The rush of PageRank will propel that page up in the rankings. However, you might have another affiliate who has content people like. This affiliate gets enough links to rank on the homepage and gets better click through rates and engagement. That affiliate will therefore rank better with fewer links overall, because he doesn’t have to keep pumping the page with more and more PageRank.

To get engagement metrics where you want them, you can start by spamming your way in with lots of click bots or by going to Amazon Mechanical Turk. That’s all OK, but it doesn’t really deal with the root issue, which is true engagement and trust. That is what allows you to persuade users to do what you want them to do.

If you’re an operator, it’s for them to join. If you’re an affiliate, it’s for the user to click through you to the operator and then join. One way or the other, faking trust will not persuade users to roll with your agenda.

Trust

Google published a set of guidelines for their quality raters in 2014. Quality raters are individuals who work indirectly for Google and check websites for particular quality signals.

My guess is Google wants to look at the footprint of trusted websites through to untrusted websites, so the algo can find easy-to-use signals that determine which sites rank and which do not.

If you believe the click-through idea, it makes sense to focus on better click through rates and engagement, as well as the other signals that Google look for in their 2014 quality rater guidelines.

Trust Optimisation

If you trust optimise your site, you are helping your site to be trusted both by users and search engines. The foundations for this type of optimisation come from Google’s quality rater guidelines.

Repeating: If you’re more trusted, you get better click through rates on SERPs, which helps rankings, and better conversions from users, which improves ROI.

Each element is dependent on the next: you have to have keywords on a site and/or a content theme, good links going to that site, and be a website users want.

It’s clear Google is now factoring in click through rates on search results. If you’re an unattractive but useful and trusted site, you will get the click through rates and then you will hold your rankings or be ranked.

The easiest way to rank is to become a trusted site with a purpose, hence the idea of trust optimisation.

In the following series of blog posts and video tips I’ll go through trust optimisation in depth, looking at tools, tips, tricks and solid references to back up my points.

Nick Garner is founder of 90 Digital, the well-known and respected iGaming search marketing agency.

Nick is obsessed with SEO and whatever it takes to rank sustainably on Google.